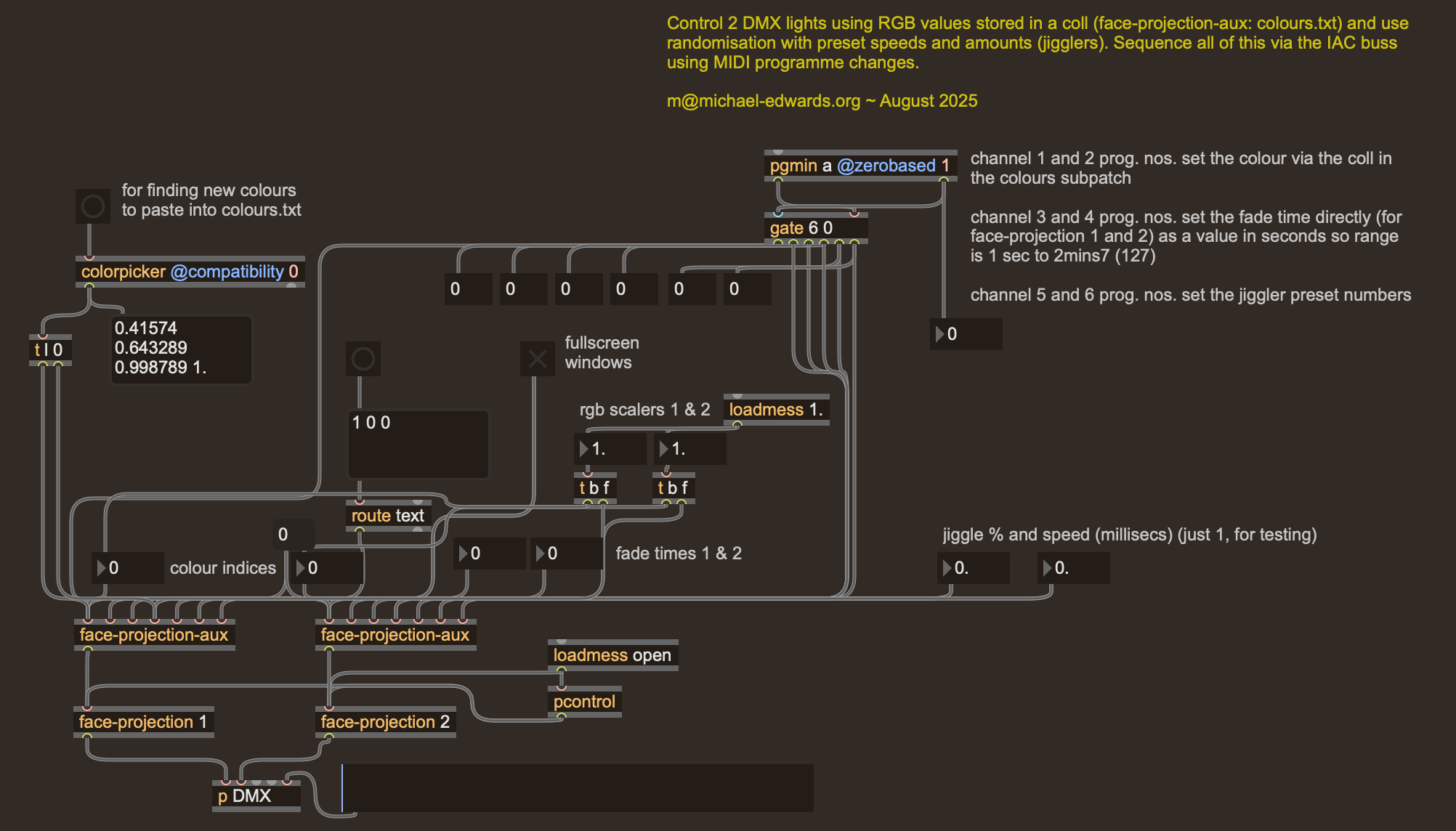

My 68-minute immersive audio piece lusted fleeting can be performed with or without live speakers (one to six of them) and sequenced lighting effects. For the performance Henrique Portovedo and I gave in Portugal on September 5th 2025, I tried out DMX programming to synchronise lighting changes with the spoken text. For now, two lights (or groups of lights) suffice, both in 4-channel mode: 8-bit values for Red, Green, Blue and White. As the live performance is driven by reaper but the DMX programming was done in MaxMSP, I added MIDI programme changes at specific points in reaper to select colours from a nine-colour palette in MaxMSP. The palette was created in collaboration with Karin Schistek, my favourite synaesthete: she listened to the music with me and determined the selections based on her very clear colour associations with the music as it developed. Two MIDI channels directly reference the colours for the two lights independently, as indexed in a simple table, and two further channels set the transition time to the colour target (the given programme number being directly translated into a transition time in seconds). Very simple but effective enough for my purposes. And because each programme change names its colour directly rather than stepping through a cue list, jumping around in the piece during rehearsal was not an issue.
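The programme-change scheme described above can be sketched roughly as follows, in Python rather than MaxMSP. Everything specific here is an assumption made for illustration: the palette values are placeholders (not the colours chosen for the piece), the channel numbers 1 to 4 are hypothetical, and the linear fade at 40 frames per second is just one plausible way to reach the colour target.

```python
# Placeholder nine-colour RGBW palette: NOT the colours used in the piece.
PALETTE = [
    (0, 0, 0, 0), (255, 0, 0, 0), (0, 255, 0, 0),
    (0, 0, 255, 0), (0, 0, 0, 255), (255, 255, 0, 0),
    (0, 255, 255, 0), (255, 0, 255, 0), (128, 64, 0, 32),
]

# Hypothetical channel roles: MIDI channel -> index of the light it controls.
COLOUR_CHANNELS = {1: 0, 2: 1}  # programme number = palette index
TIME_CHANNELS = {3: 0, 4: 1}    # programme number = transition time in seconds


class Light:
    """Current RGBW colour plus the transition time for the next change."""

    def __init__(self):
        self.colour = (0, 0, 0, 0)
        self.transition_s = 0.0


def fade_frames(start, target, seconds, fps=40):
    """Yield linearly interpolated RGBW frames, ending exactly on target."""
    steps = max(1, round(seconds * fps))
    for i in range(1, steps + 1):
        t = i / steps
        yield tuple(round(s + (e - s) * t) for s, e in zip(start, target))


def handle_programme_change(lights, channel, programme):
    """Update the affected light; return the RGBW frames to send (maybe none)."""
    if channel in TIME_CHANNELS:
        lights[TIME_CHANNELS[channel]].transition_s = float(programme)
        return []
    if channel in COLOUR_CHANNELS:
        light = lights[COLOUR_CHANNELS[channel]]
        target = PALETTE[programme]  # IndexError if outside the palette
        frames = list(fade_frames(light.colour, target, light.transition_s))
        light.colour = target
        return frames
    return []  # other channels are ignored in this sketch
```

Because each message names its target outright, starting playback anywhere in the piece only needs the most recent programme change on each channel, with no cue counter to resynchronise.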

I also added supertitles in MaxMSP. There are 179 text snippets read out during the performance. As I’d already had enough of entering into reaper, by hand, every programme change needed for the lighting effects, this time I looked to chatGPT for a way of scripting the connection between spoken text snippet and MaxMSP supertitle projection. From the get-go, I’d written Lisp code to place slides into reaper, so that the speakers are prompted to read their text at the exact point in the piece where it occurs in the fully-produced ‘album’ version (i.e. the one with my recorded voice speaking the texts; see this for more details). reaper can have video tracks, but at some point I discovered that these don’t have to contain actual videos: they can simply contain PNG images, which are displayed in the video window according to their placement on the timeline. This is perfect for lusted fleeting performances. As the PNGs were already placed exactly where necessary, it was a ‘simple’ case of adding the index [1] to the text snippet, again stored in a MaxMSP table, at the start of every new PNG image in reaper. Because these indices are tied to events already on the timeline, unlike the lighting changes, the job lent itself to automation with chatGPT’s help. reaper can be scripted in Lua, and I was surprised that chatGPT knew so much about this. It was quite simple to get ascending programme changes added to one track at the start point of every PNG on another track. Much simpler than I imagined in fact, and far quicker than typing them all in by hand.
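The logic of that script, independent of reaper's own scripting API, might look something like the sketch below. It is written in Python rather than Lua and does not touch reaper at all: it only turns a list of PNG start times into ascending programme changes. How the 179 indices are divided between the two MIDI channels is an assumption here (indices 0 to 127 on one channel, the rest on the next), and the base channel number 5 is hypothetical.

```python
def index_to_midi(index, base_channel=5):
    """Map a snippet index to (channel, programme), 128 values per channel."""
    bank, programme = divmod(index, 128)
    if index < 0 or bank > 1:
        raise ValueError("index must fit in two channels (0..255)")
    return base_channel + bank, programme


def midi_to_index(channel, programme, base_channel=5):
    """Inverse mapping, as the receiving patch would need to apply it."""
    return (channel - base_channel) * 128 + programme


def programme_changes(png_start_times, base_channel=5):
    """One (time, channel, programme) event per PNG, in timeline order."""
    return [
        (t, *index_to_midi(i, base_channel))
        for i, t in enumerate(sorted(png_start_times))
    ]
```

With 179 start times, the first 128 events land on the base channel and the remaining 51 on the next one, each looked up again on the receiving side with `midi_to_index`.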

I should of course mention that in order to send data to the DMX lights, I used the serial object in MaxMSP to communicate with the Enttec DMX USB Pro. Sadly I didn’t have any luck with the cheaper Open DMX USB box from the same company. Thanks to Christos Michalakos for sharing his test patch to get me started with this.
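For anyone curious what actually goes down the serial line, here is a minimal sketch of the message framing as I understand it from Enttec's published DMX USB Pro API: a start byte, a label (6 for "send DMX packet"), a two-byte little-endian length, the DMX start code followed by the channel levels, and an end byte. It is in Python rather than a Max patch, the function name is made up for illustration, and the padding to 24 channels reflects my reading of the API's minimum data size, so treat the details as assumptions to check against the official document.

```python
START_BYTE = 0x7E
END_BYTE = 0xE7
LABEL_SEND_DMX = 6  # "Output Only Send DMX Packet Request"


def dmx_usb_pro_packet(levels):
    """Frame up to 512 channel levels (0-255 each) as one DMX USB Pro message."""
    data = bytes(levels)  # raises ValueError if a level is outside 0..255
    if len(data) > 512:
        raise ValueError("a DMX universe has at most 512 channels")
    data = data.ljust(24, b"\x00")  # pad short frames (assumed minimum size)
    payload = b"\x00" + data        # DMX start code, then the channel levels
    n = len(payload)
    header = bytes([START_BYTE, LABEL_SEND_DMX, n & 0xFF, n >> 8])
    return header + payload + bytes([END_BYTE])


# Two lights in 4-channel RGBW mode on DMX channels 1-4 and 5-8.
packet = dmx_usb_pro_packet([255, 0, 0, 0, 0, 0, 255, 64])
```

The resulting bytes are what would be written to the serial port, whether from the serial object in MaxMSP or from any other environment.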

[1] I need two MIDI channels for these indices, as MIDI programme changes are, like all MIDI data bytes, limited to 7 bits, or a maximum of 128 values, whereas we need 179 indices.
