vivos as a noun means alive in Spanish and Portuguese; living in Latin; but also tips its hat at the 1980 computer music landmark Mortuos Plango, Vivos Voco by Jonathan Harvey.

My vivos software aims to create flexible, lively, multi-layered, and varied real-time sound processing. Its focus is upon unattended processing driven by audio analysis, meaning, here, that the triggering and control of sound processing techniques is undertaken by the coupling of continually analysed and updated incoming audio characteristics to various processing parameters. So it’s in the spirit, at least, of Agostino di Scipio’s sound is the interface though allows for intervention where desired, at the very least in terms of amplitude control of the various processes employed. Both video examples presented in this post however were realised with no intervention whatsoever once the input sound and analysis processes were started. The one above used Sciarrino’s Notturni Brillanti number 2: Scorrevole e Animato (for viola) as input.

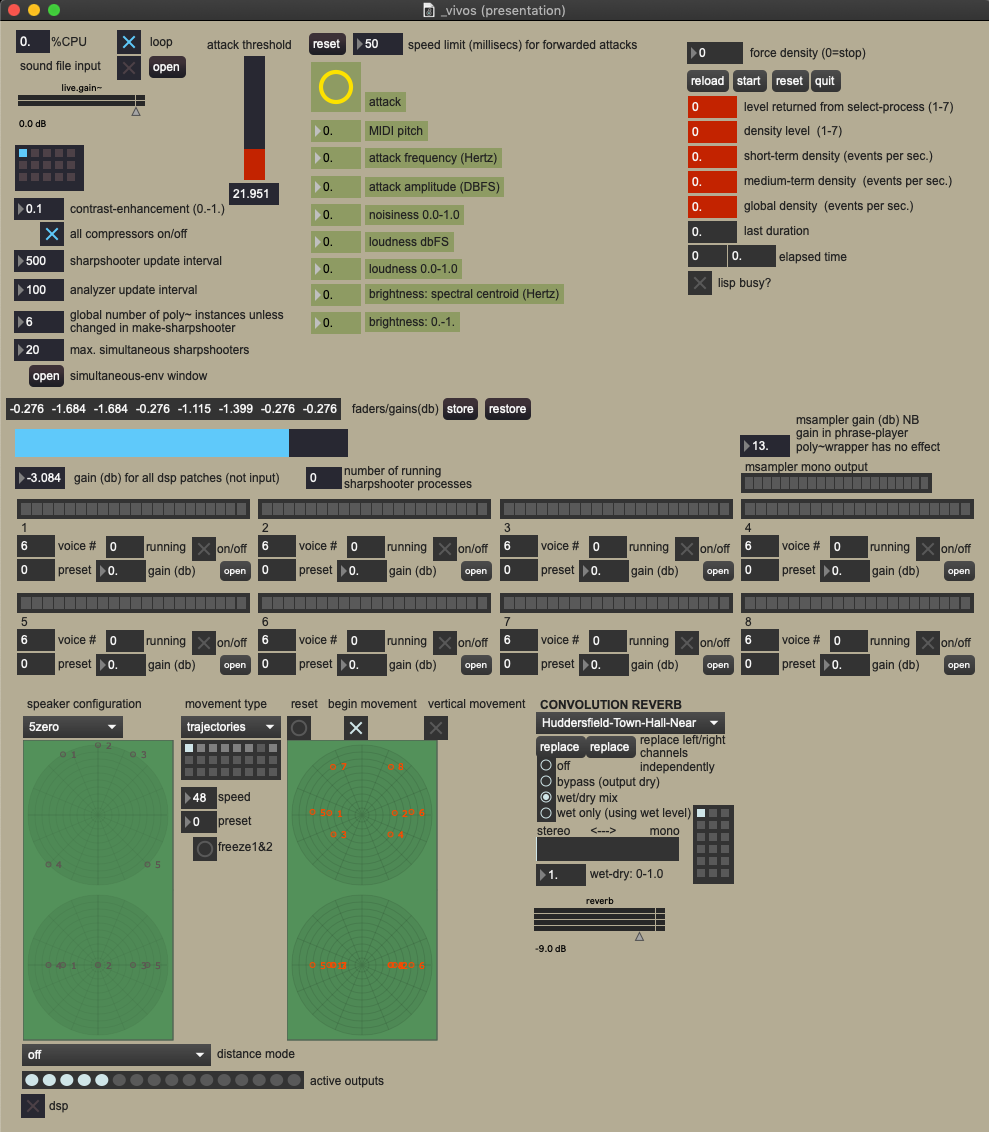

vivos is accomplished using a combination of MaxMSP patches and Common Lisp software. These two programming environments interact seamlessly via UDP network communication so that calling Lisp routines from MaxMSP patches is lightweight and trivially easy to accomplish: most people would notice no difference or time lag between connecting one MaxMSP object to another and stepping out of MaxMSP into Lisp and back.

their faces on fire was the model from which I extended this new system. This piece from 2014 used a Common Lisp object of my design (sharpshooter) to trigger specific audio processes. My reason for moving into Lisp when essentially working with MaxMSP was similar to earlier projects that used Javascript within MaxMSP: Max is great for interfacing with hardware and creating performance GUIs; it’s great for processing, analysing, and synthesising sound; but it’s not so great when working with anything other than the simplest of data structures, even lists, and isn’t truly object-oriented.

In my Common Lisp software slippery chicken I’ve developed a lot of object-oriented tools for composition and the generation or manipulation of musical data. So coupling MaxMSP and Common Lisp is for me not just an obvious thing to do but a sensible thing to do: choose the right programming environment for the job at hand rather than forcing everything into one box.

The code from their faces on fire was developed much further in March of 2020 to incorporate a more sophisticated process-selection algorithm based on the analysis and categorisation of incoming real-time audio, e.g. from one or more musical instruments. Its intended application is in a new piece of mine for three violas and electronics to be performed next year, but I think it will play a role in quite a few pieces in the future.

how it works

Essentially, incoming audio is analysed for various characteristics such as note onsets with amplitude and pitch information, noisiness, loudness, and brightness. These constantly updated data streams can be used to trigger and steer any arbitrary processing parameters.

The base system is actually blind to the type and details of the processing performed: processing patches are supplied in the form of MaxMSP abstractions which are run in parallel with themselves and other such processes (in MaxMSPese: via poly~). There are some basic requirements for these patches, such as responding to (or at least explicitly choosing to ignore) detected attacks and preset numbers, but they don’t necessarily have to process audio input or even provide audio output.

So, strangely enough for a MaxMSP project, you don’t even define in the main patch which processing abstractions should run, rather, you define merely the number of processors via placeholders (MaxMSP bpatchers). The abstractions to be loaded in the placeholders are defined in the initialisation of a Common Lisp sharpshooter object:

(in-package :sharpshooter)

(defparameter +vivos-sharpshooter+

(make-sharpshooter '((1 (stretch transp grandly transp))

(2 (stretch addsyn grandly stretch))

(3 (transp grandly addsyn

phrase-player))

(4 (phrase-player transp grandly addsyn stretch))

(5 (grandly addsyn 2dwavesynth stretch gran))

(6 (2dwavesynth phrase-player grandly stretch addsyn))

(7 ((phrase-player :end-time 45) long-sndfiles

stretch 2dwavesynth grandly addsyn)))

:start-density 4

:patch-instances '(phrase-player 1)

:patch-defaults '((phrase-player (:level 6))

(grandly (:level 7)))))

Here we can see lists of processes, with each name (stretch, grandly, etc.) referring to patches of exactly that file name (plus the .maxpat extension on Apple computers). These are the patches that will automatically be loaded when MaxMSP tells Lisp to evaluate this code at startup.

What we also see here is that the lists are numbered from 1-7. These relate directly to one of the main design features of vivos, namely, that there’s a global concept of activity density of the incoming, analysed audio, with 1 being the lowest and 7 being the highest densities. These densities arise out of the note detection and timing analysis done in the Common Lisp code. Yes, Lisp does the timing, not MaxMSP as you might reasonably imagine. Lisp code also saves phrase information in the form of lists of pitches, amplitudes, and durations, with a phrase being flexibly but simply defined as a sequence of a specific number of notes, an elapsed time since the first attack after the previous phrase, or some other user-provided mechanism for triggering the phrase collection.

The notion of activity or note density is provided at three levels: short-term (the last ten seconds), medium-term (the last minute), and long-term or global values. The thresholds to short-term and medium-term values can of course be tweaked. All three are available to audio processors via simple values that measure the number of detected events per second. The density level of 1-7 arises out of the short-term value via the following mapping (currently, and this may change as I work more with the system):

level 1: 0.25 events per second (or 1 every 4 seconds)

level 2: 0.5 events per sec. (1 every 2 seconds)

level 3: 1 per second

level 4: 4 per second

level 5: 8 per second

level 6: 13 per second

level 7: more than 13 per second

These density levels determine the likelihood of a processor being triggered

when using a deterministic rather than pseudo-random algorithm to decide. Incoming attacks detected by the audio analysis system are forwarded to a Lisp method which determines whether the current attack should start a process. The higher the density level, the more likely a process will start. The decision process can be supplied by the user when initialising the sharpshooter object but the default method takes more into account than just the current density level: whether there was a pause of more than a second between the current and last attack will increase likelihood, as will whether the short-term density in events-per-second has increased by more than 0.3. However in each and every case, not every attack will trigger a process.

process selection

The selection of processes within each of the seven density levels is determined by slippery chicken’s procession algorithm. The results of this are

mirrored and selected from, sequentially and circularly, each time the next process is required for any given level. In order for the procession algorithm to work well, we’ll need at least four unique processes for each level but less will also work, albeit with process repetition. Processes may also explicitly occur twice or more in each level, so that they are used more often than others. On the other hand, one way to reduce the number of occurrences of a particular process is to pass a number between 1 (use very little) and 9 (use almost every time) to the :level slot in :patch-defaults (see the code above). This option would use the deterministic decision algorithm a second time to decide whether to actually trigger this exact process or not, even after the main method has determined that a process should be started. Yet another way to reduce the use of a process is to reduce the number of :patch-instances (also used in the code above) in MaxMSP. Per default, MaxMSP will run up to six simultaneous instances of any given process (this global default can be changed) but this can be reduced or even increased for any given process when the Lisp sharpshooter object is initialised.

presets

Along with the determination of which, if any processor to trigger, the selection method also chooses—again via the procession algorithm—which processor preset to use. A preset will most likely be a snapshot of the various and perhaps constantly varying parameters of an essentially fixed sound modification or synthesis process. Any process is free to ignore or remap the automatically-chosen preset, but the basic concept is that each MaxMSP processor should be at least theoretically deployable at any given density level, and so when developing the processor we provide presets for each of the seven levels. In fact, the idea is to have seven presets per level, which means a total of 49 presets. That may sound like a lot but these can and probably will be slight modifications of each other, varied to provide sonic freshness (as we know, many processes have a very short shelf life). As each row of seven presets reflects an increasingly higher density level, the character of each is intended to reflect this by, e.g., increasing the strength of the effect, its duration, loudness, brightness, depth, distortion, etc. But of course nothing forces the programmer to actually take this approach.

It is in fact trivial to experienced MaxMSP programmers to add new processes along with their presets, as all that is needed is the modification of a MaxMSP template, incorporating perhaps the various sonic characteristics of the analysed sound and mapping this to the processing/synthesis parameters. For testing purposes and for the capture of the two video files presented here, I used the processes named in the code above to accomplish time stretching; transposition/harmonising; granular delay and granular synthesis in general; additive synthesis driven by harmonic analysis of the incoming audio; sample triggering using various percussive samples and the timing of the stored phrases’ data extracted, again, from the incoming sound analysis; 2-dimensional wavetable synthesis; and the triggering of quite long soundfiles, intended as background ‘pads’.

This second demonstration uses Sciarrino’s Notturni Brillanti No. 1 (Di volo) as the test input.

Acknowledgements

This project uses the very excellent Ambisonics externals from ICST Zürich as well as the similarly superb multiconvolve~ external object from Huddersfield’s HISS Tools and here in particular indispensable analyzer~ object from Tristan Jehan.

Leave a Reply